I am Huajie Tan (谭桦杰), a third-year M.S. student at the School of Computer Science, Peking University, advised by Prof. Shanghang Zhang. Previously, I received my dual-degree B.Eng. from Tianjin University (College of Intelligence and Computing & School of Microelectronics) and was honored with the Outstanding Graduate Award.

My research focuses on embodied AI and multi-modal foundation models. I am currently an intern at the Beijing Academy of Artificial Intelligence (BAAI), exploring pathways toward general-purpose robotic intelligence and real-world deployment of embodied systems. I am also open to collaborations and research partnerships; feel free to email me at tanhuajie@stu.pku.edu.cn.

I am also seeking entrepreneurship opportunities. I have received multiple top-tier offers from leading industry labs, including Huawei Top Minds (华为天才少年), Tencent QingYun (腾讯青云), JD Tech Genius Team (京东TGT), BAAI Star (智源智星), and Xiaomi Top Talent (小米顶尖). If you have an opportunity that may be a better fit, or would simply like to connect, please feel free to reach out.

🔥 News

- 2026.02: 🎉 Both Robo-Dopamine and Action-Sketcher were accepted to CVPR 2026. See you in Denver, Colorado, USA!

- 2026.01: 🔥 Released RoboBrain 2.5, our more powerful embodied foundation model (first author, project lead).

- 2026.01: 🎯 Released our new research paper Action-Sketcher (first author, project lead).

- 2025.12: 🎯 Released our new research paper Robo-Dopamine (first author, project lead).

- 2025.10: 🔥 Released LLaVA-OneVision-1.5, a fully open framework for democratized MLLM training.

- 2025.10: 🔥 Released the more advanced RoboOS-NeXT (first author, project lead).

- 2025.09: 🎉 Reason-RFT accepted to NeurIPS 2025. See you in San Diego, USA!

- 2025.06: 🎉 Released RoboBrain 2.0 and RoboOS at the BAAI Conference 2025 (first author, project lead).

- 2025.04: 🌍 RoboBrain 1.0 selected for CVPR 2025’s official Embodied AI Trends Commentary.

- 2025.02: 🎉 RoboBrain 1.0 accepted to CVPR 2025.

📝 Publications

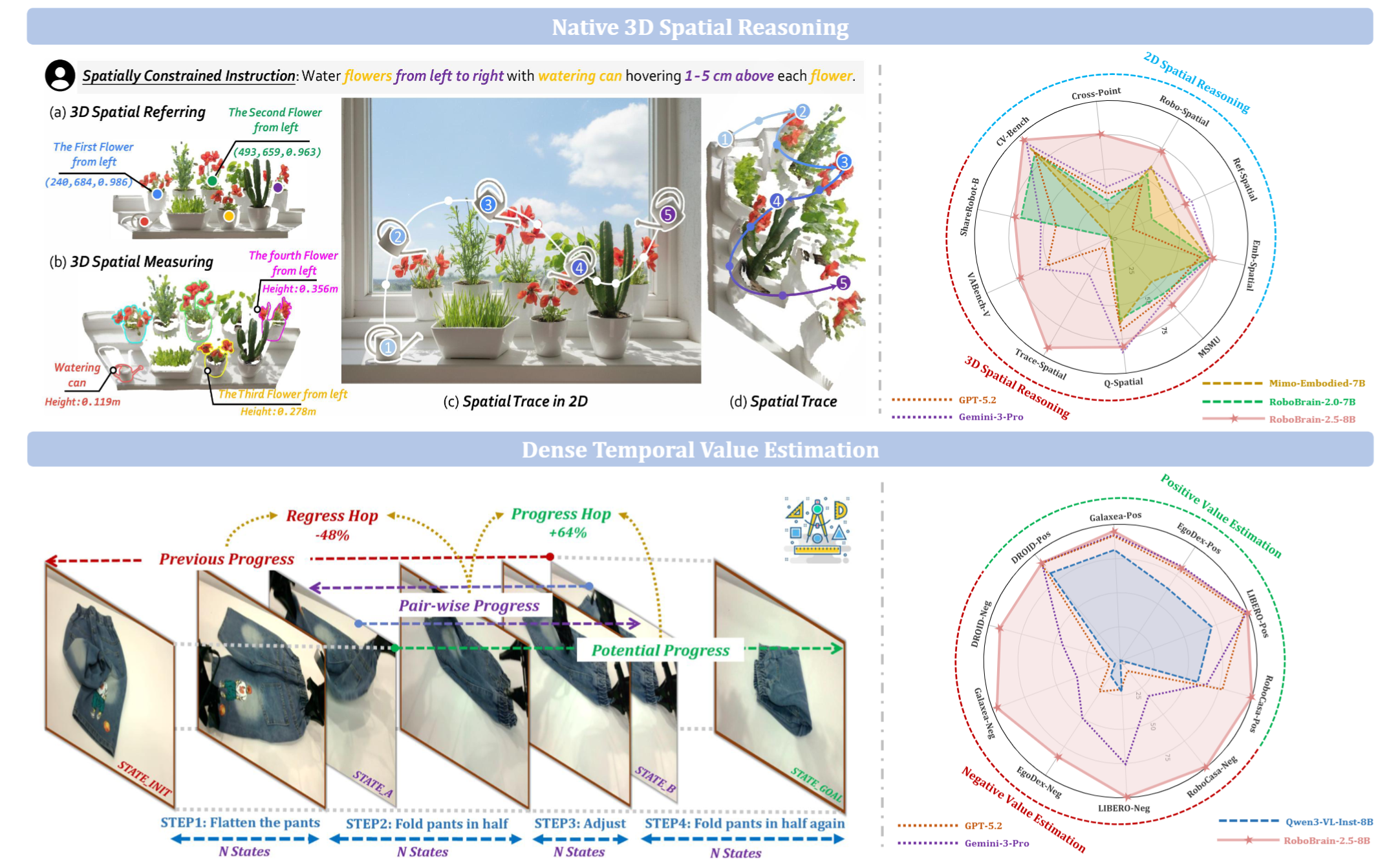

RoboBrain 2.5: Depth in Sight, Time in Mind.

BAAI RoboBrain Team

Co-First Author, Project Lead, Technical Report 2026

Project | Paper | Code

TL;DR: RoboBrain 2.5 is a next-generation embodied AI foundation model designed for complex robotic manipulation, driven by two major upgrades: (1) precise 3D spatial reasoning that generates depth-aware, physically constrained manipulation traces, and (2) dense temporal value estimation that provides step-by-step execution feedback. Together, these advances enable more physically grounded and execution-aware intelligence for downstream learning tasks.

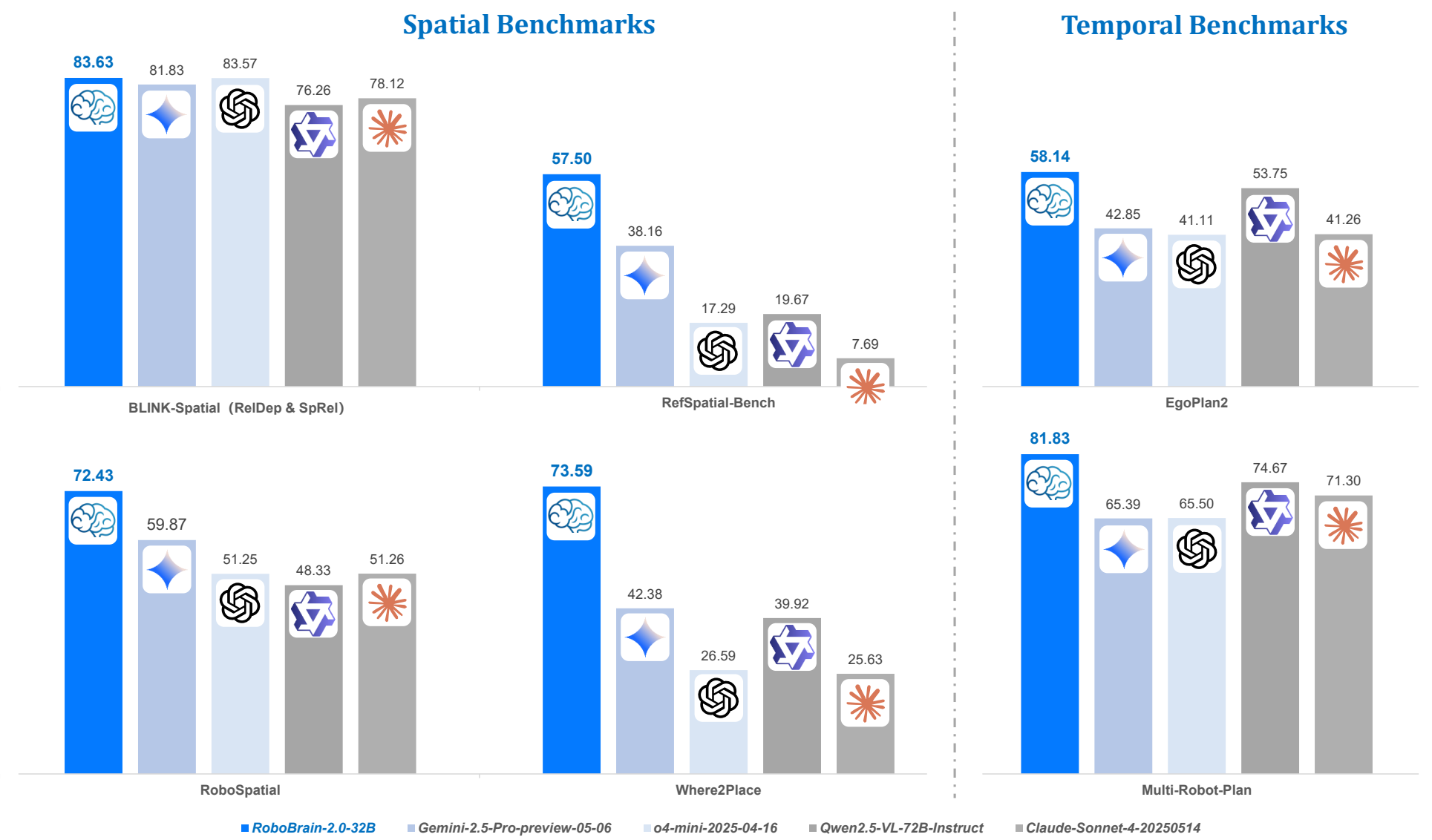

RoboBrain 2.0: See Better. Think Harder. Do Smarter.

BAAI RoboBrain Team

Co-First Author, Core Contributor, Technical Report 2025

Project | Paper | Code

TL;DR: RoboBrain 2.0 was our most powerful embodied brain model prior to RoboBrain 2.5, designed to unify perception, reasoning, and planning for complex physical tasks. Achieving top performance across diverse spatial and temporal benchmarks, it excels in critical real-world capabilities including spatial understanding, trajectory forecasting, and multi-agent long-horizon planning, marking a significant step toward generalist embodied agents.

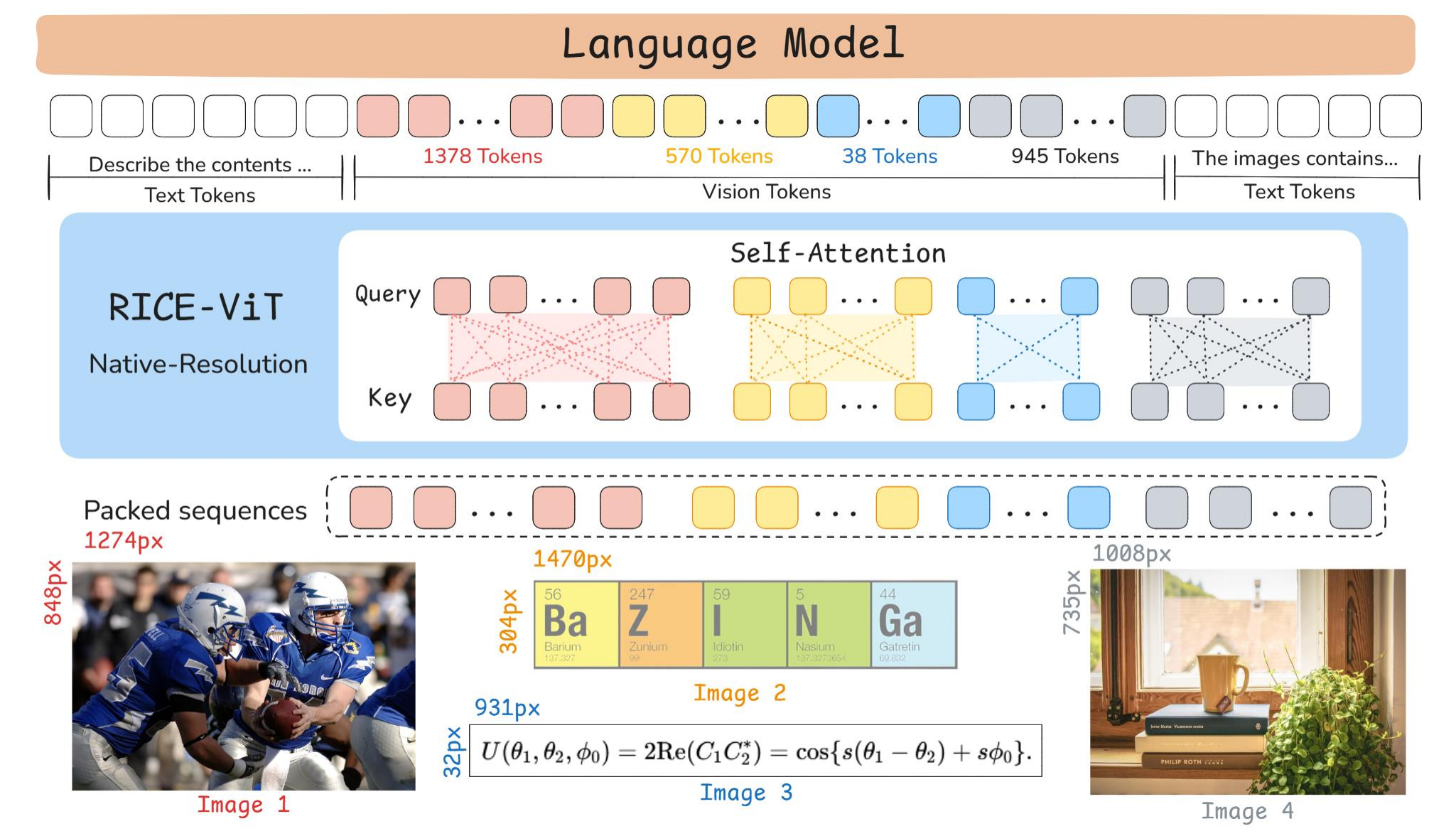

LLaVA-OneVision-1.5: Fully Open Framework for Democratized Multimodal Training

LLaVA-OneVision Community Contributors

Core Contributor, Technical Report 2025

Paper | Code

TL;DR: LLaVA-OneVision-1.5 is an open and highly efficient family of Large Multimodal Models that achieves state-of-the-art performance with significantly reduced training costs. Powered by massive curated datasets and an optimized training framework developed under a $16,000 budget, the models consistently outperform competitors like Qwen2.5-VL across numerous benchmarks.

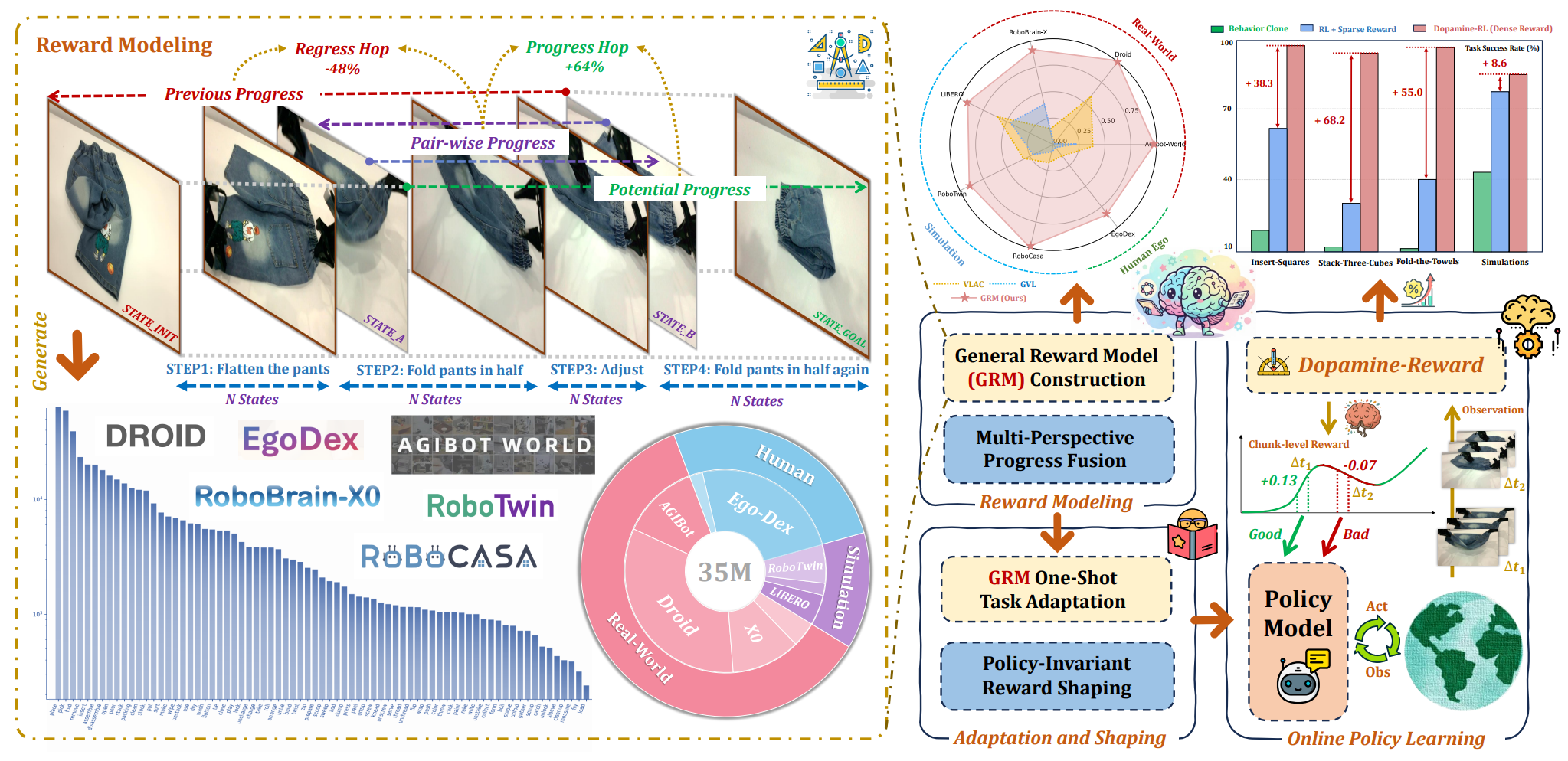

Robo-Dopamine: General Process Reward Modeling for High-Precision Robotic Manipulation

Huajie Tan*, Sixiang Chen*, Yijie Xu*, Zixiao Wang, Yuheng Ji, Cheng Chi, Yaoxu Lyu, Zhongxia Zhao, Xiansheng Chen, Peterson Co, Shaoxuan Xie, Guocai Yao, Pengwei Wang, Zhongyuan Wang, Shanghang Zhang

Co-First Author, Project Lead, CVPR 2026

Project | Paper | Code

TL;DR: Joy is dopamine’s handiwork—whether in humans or in robotics.

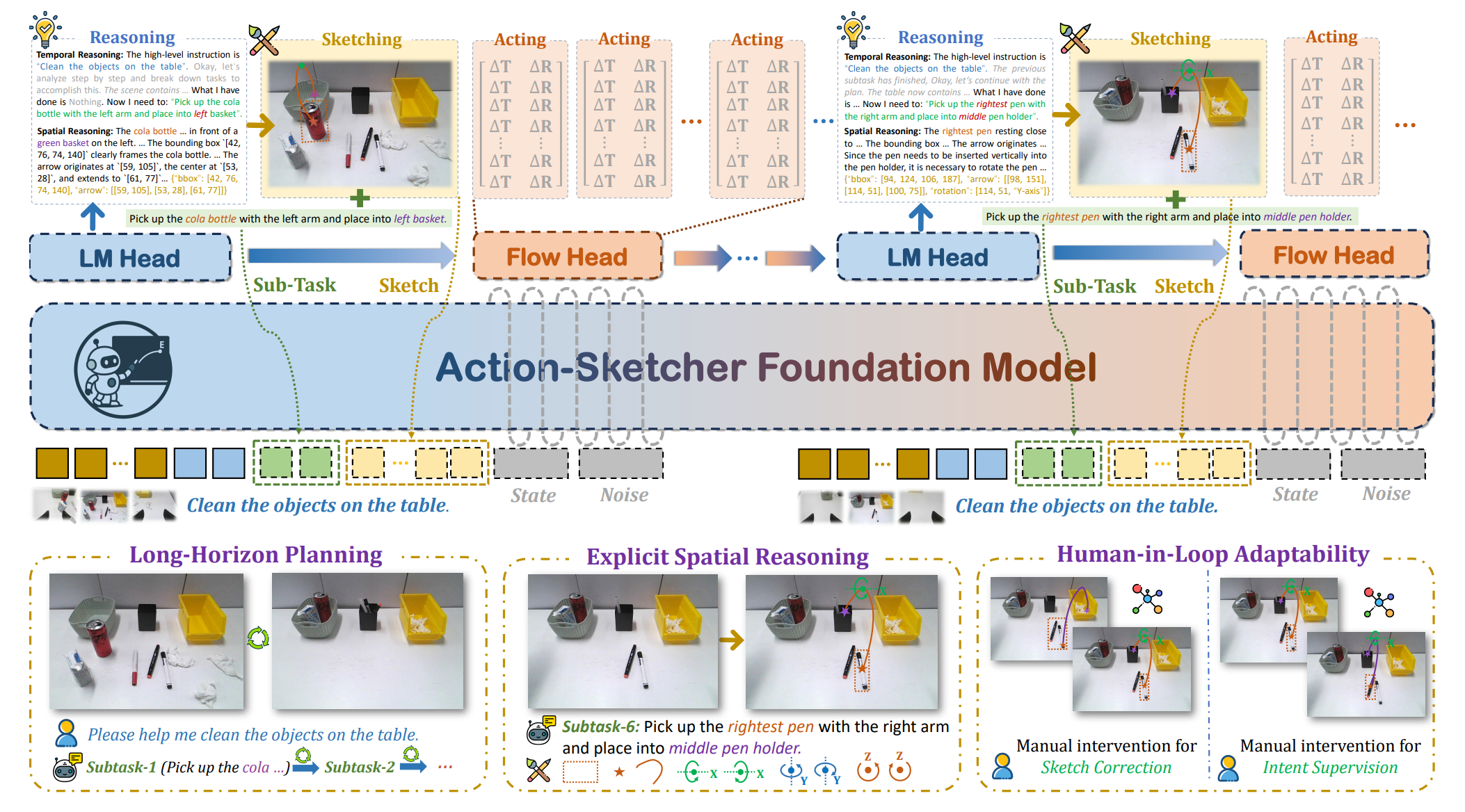

Action-Sketcher: From Reasoning to Action via Visual Sketches for Long-Horizon Robotic Manipulation

Huajie Tan*, Peterson Co*, Yijie Xu*, Shanyu Rong, Yuheng Ji, Cheng Chi, Xiansheng Chen, Qiongyu Zhang, Zhongxia Zhao, Pengwei Wang, Zhongyuan Wang, Shanghang Zhang

Co-First Author, Project Lead, CVPR 2026

TL;DR: Make intent visible. Make action reliable.

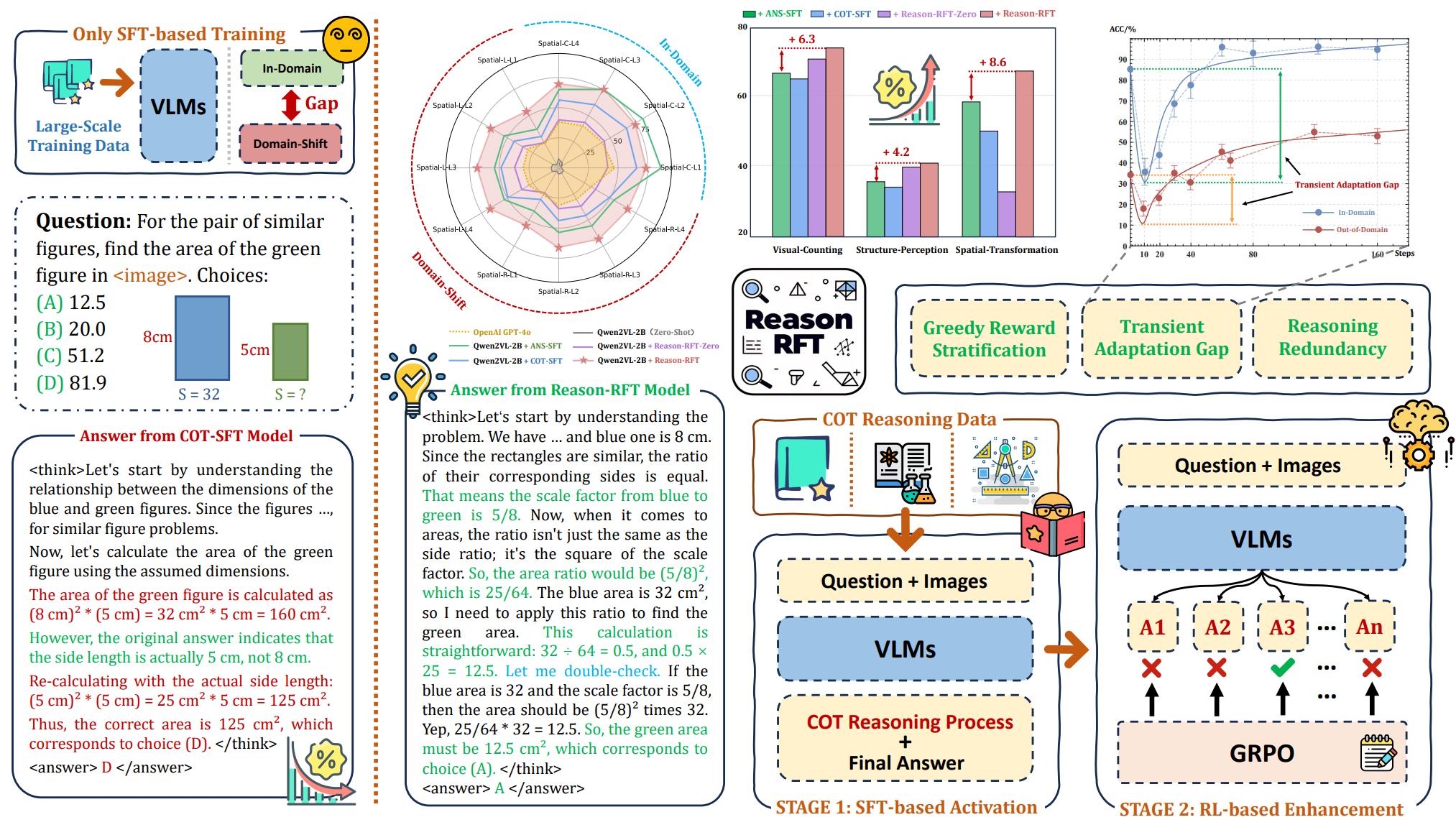

Reason-RFT: Reinforcement Fine-Tuning for Visual Reasoning of Vision Language Models

Huajie Tan*, Yuheng Ji*, Xiaoshuai Hao*, Xiansheng Chen, Pengwei Wang, Zhongyuan Wang, Shanghang Zhang

Co-First Author, NeurIPS 2025

Project | Paper | Code

TL;DR: Reason-RFT is a pioneering two-stage RFT framework that enhances visual reasoning in VLMs, delivering superior performance, data efficiency, and robust generalization under domain shifts across various complex tasks.

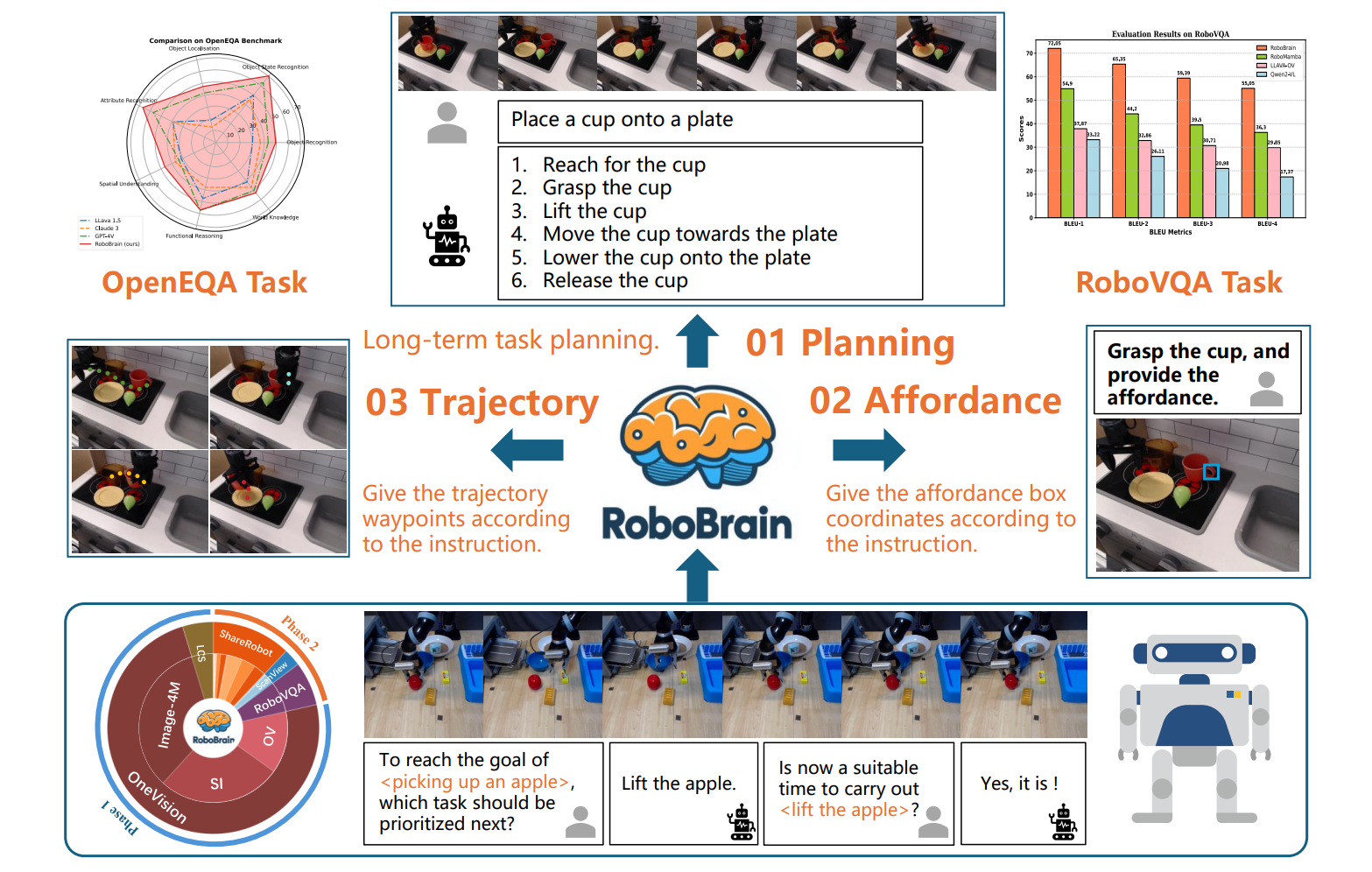

RoboBrain: A Unified Brain Model for Robotic Manipulation from Abstract to Concrete

Yuheng Ji*, Huajie Tan*, Jiayu Shi*, Xiaoshuai Hao*, Yuan Zhang, Hengyuan Zhang, Pengwei Wang, Mengdi Zhao, Yao Mu, Pengju An, Xinda Xue, Qinghang Su, Huaihai Lyu, Xiaolong Zheng, Jiaming Liu, Zhongyuan Wang, Shanghang Zhang

Co-First Author, CVPR 2025

Project | Paper | Code

TL;DR: RoboBrain is the first unified embodied brain model, equipped with planning capability, affordance perception, and trajectory prediction.

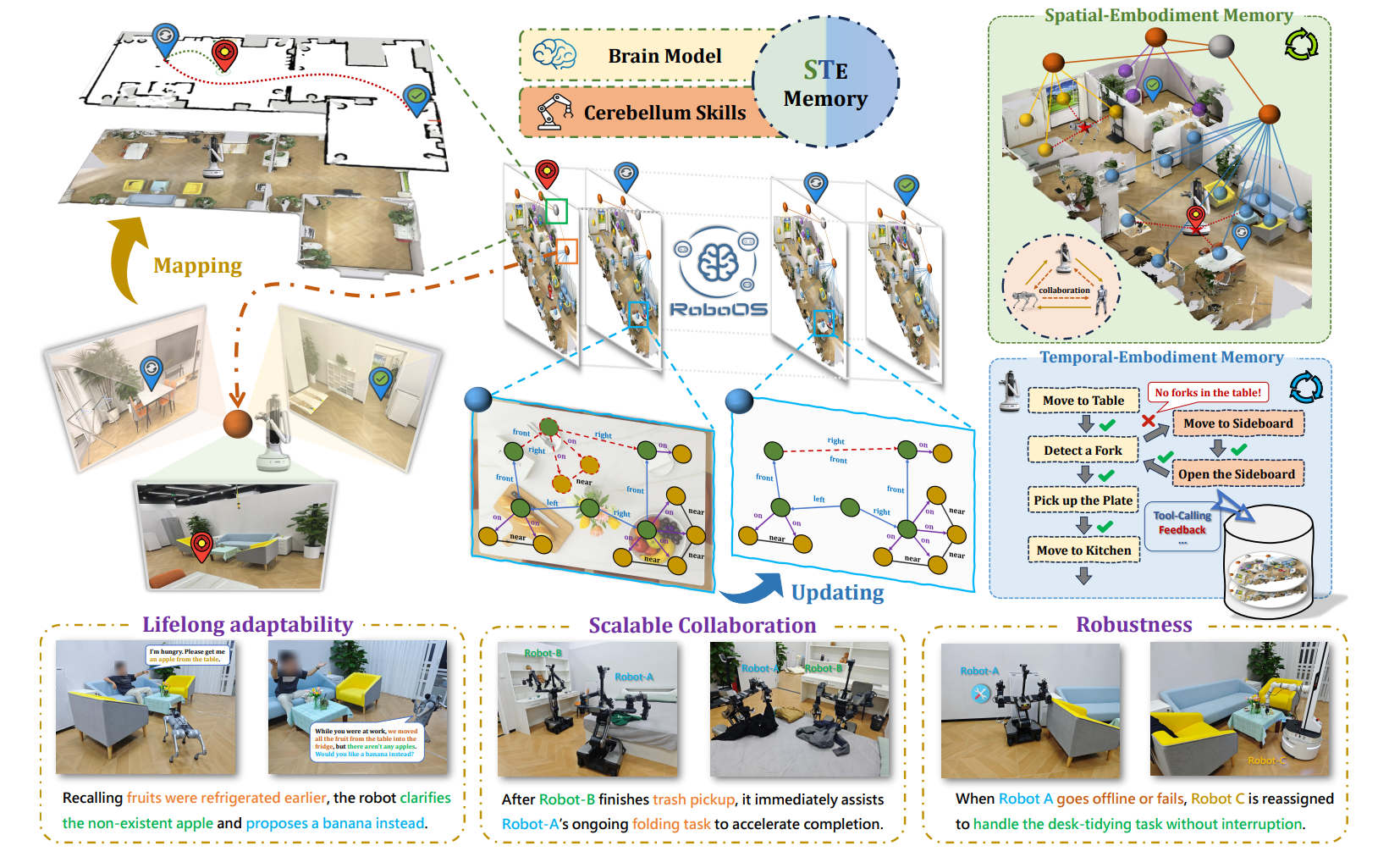

Huajie Tan*, Cheng Chi*, Xiansheng Chen*, Yuheng Ji*, Zhongxia Zhao, Xiaoshuai Hao, Yaoxu Lyu, Mingyu Cao, Junkai Zhao, Huaihai Lyu, Enshen Zhou, Ning Chen, Yankai Fu, Cheng Peng, Wei Guo, Dong Liang, Zhuo Chen, Mengsi Lyu, Chenrui He, Yulong Ao, Yonghua Lin, Pengwei Wang, Zhongyuan Wang, Shanghang Zhang

Co-First Author, Project Lead, arXiv 2025

TL;DR: RoboOS-NeXT is a unified memory-based framework for lifelong, scalable, and robust multi-robot collaboration.

📖 Education

- 2023.09 - 2026.06, Master, School of Computer Science, Peking University, Beijing.

- 2019.09 - 2023.06, Undergraduate, College of Intelligence and Computing & School of Microelectronics, Tianjin University, Tianjin.

💻 Internships

- 2024.12 - present, Beijing Academy of Artificial Intelligence (BAAI), Beijing.

- 2024.05 - 2024.11, DeepGlint, Beijing.

👥 Service

- Reviewer for CVPR, ICCV, NeurIPS, and ICRA

- Active open-source contributor to huggingface/transformers and huggingface/smolagents

- Teaching Assistant, Fundamentals of Artificial Intelligence (Instructor: Prof. Shanghang Zhang)